What is the Host Memory Buffer Technology in SSD?

Solid-State Drives (SSDs) have become the go-to storage solution thanks to their speed, reliability, and durability. However, as demands for faster data processing grow, SSDs face challenges in maintaining optimal performance, especially budget drives that omit onboard DRAM. This is where Host Memory Buffer (HMB) technology comes in: it lets an SSD borrow a small portion of system memory as a cache. In this article, I’ll explore what HMB is, how it improves SSD performance, and what its benefits are.

What is Host Memory Buffer (HMB) technology

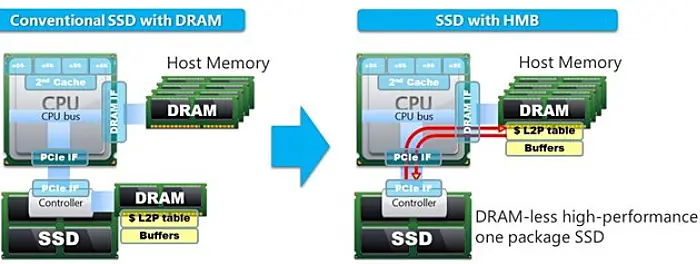

Host Memory Buffer (HMB) is a technology that allows an SSD to use a portion of the host system’s memory (RAM) as a cache. It addresses the challenge of maintaining high-speed read and write operations on drives that have no dedicated onboard Dynamic Random Access Memory (DRAM) cache. By keeping frequently used data, chiefly the drive’s address-mapping tables, in system memory, HMB speeds up data access and improves overall SSD performance.
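How does the SSD actually get access to system memory? In NVMe, the host allocates the buffer, possibly as several non-contiguous chunks, and describes it to the drive with a list of 16-byte descriptor entries, each giving a chunk’s page-aligned address and its size in memory pages. The Python sketch below packs such a list. It assumes a 4 KiB memory page size, and the helper names and example addresses are hypothetical; a real driver builds this list in DMA-capable memory inside the kernel.

```python
import struct

# One Host Memory Buffer Descriptor Entry (16 bytes, little-endian):
#   bytes 0-7   BADD  buffer address, aligned to the memory page size
#   bytes 8-11  BSIZE buffer size, in memory-page-size units
#   bytes 12-15 reserved (packed as zero padding)
DESCRIPTOR = struct.Struct("<QI4x")


def build_descriptor_list(chunks, page_size=4096):
    """Pack (address, size_in_bytes) pairs into an HMB descriptor list."""
    out = bytearray()
    for addr, size in chunks:
        if addr % page_size or size % page_size or size == 0:
            raise ValueError("each chunk must be non-empty and page-aligned")
        out += DESCRIPTOR.pack(addr, size // page_size)
    return bytes(out)


def total_buffer_bytes(descriptor_list, page_size=4096):
    """Sum the chunk sizes described by a packed descriptor list."""
    return sum(bsize * page_size
               for _addr, bsize in DESCRIPTOR.iter_unpack(descriptor_list))


# Hypothetical example: two non-contiguous 32 MiB chunks of host RAM.
chunks = [(0x1_0000_0000, 32 * 2**20), (0x2_4000_0000, 32 * 2**20)]
desc = build_descriptor_list(chunks)
print(len(desc), total_buffer_bytes(desc) // 2**20)  # 32 64
```

Describing the buffer as a list of chunks means the host does not need to find one large contiguous region of RAM to give the drive.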

How does HMB improve SSD performance?

HMB enhances SSD performance in several ways.

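- First, it decreases latency. A DRAM-less SSD without HMB has nowhere fast to keep its address-mapping table, so for many requests the controller must first read the relevant mapping entry from NAND flash before it can access the data itself. With HMB, frequently used mapping entries are cached in the system’s DRAM, which the controller can reach over the PCIe bus far faster than it can read flash, so that extra flash read is avoided and latency drops.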

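- Second, HMB increases data transfer rates. SSDs are already much faster than hard disk drives (HDDs), but a DRAM-less drive that must fetch mapping entries from flash loses some of that speed, particularly under random workloads spread across the drive. Caching those entries in system memory recovers much of the lost throughput.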

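- Third, HMB can reduce write amplification. Write amplification is a phenomenon in SSDs where the amount of data physically written to flash exceeds the amount the host logically asked to write. It arises because flash pages cannot be overwritten in place: blocks must be erased before they can be reused, so the SSD has to relocate still-valid data during garbage collection, and mapping-table updates that must be saved to flash add further writes. With more mapping metadata held in system memory, the controller can manage data placement more effectively and flush mapping updates less often, reducing unnecessary erasures and writes and thus lowering write amplification.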

- Lastly, HMB can improve durability. With reduced write amplification, the flash undergoes fewer program/erase cycles, prolonging the drive’s lifespan. Additionally, because HMB requires no additional hardware on the drive, there are potentially fewer components that can fail than on a drive with onboard DRAM.
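Write amplification is usually expressed as a write amplification factor (WAF): the amount of data written to NAND divided by the amount the host asked to write. The sketch below uses purely illustrative numbers, not measurements from any real drive, to show how garbage-collection relocations and mapping-table writes both push the factor above 1. The second call shrinks only the metadata term, the kind of reduction that better metadata caching such as HMB can help bring about.

```python
def write_amplification_factor(host_writes, gc_relocated=0.0, metadata_writes=0.0):
    """WAF = data physically written to NAND / data the host asked to write.

    gc_relocated:    still-valid data copied elsewhere during garbage collection
    metadata_writes: mapping-table updates saved to flash
    All arguments use the same unit (for example GB).
    """
    if host_writes <= 0:
        raise ValueError("host_writes must be positive")
    nand_writes = host_writes + gc_relocated + metadata_writes
    return nand_writes / host_writes


# Illustrative numbers only.
print(write_amplification_factor(100, gc_relocated=30, metadata_writes=5))  # 1.35
print(write_amplification_factor(100, gc_relocated=30, metadata_writes=1))  # 1.31
```

A WAF of exactly 1 would mean every byte the host writes reaches flash once and nothing more; every extra program cycle counts against the drive’s endurance.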

In essence, HMB allows DRAM-less SSDs to recover much of the benefit of an onboard DRAM cache without its cost and potential reliability issues. It is a significant step forward for affordable, high-performance SSDs.

How HMB Differs from DRAM Cache

Many SSDs come with DRAM for caching. If you want to know more about DRAM SSDs, refer to DRAM SSD vs DRAM Less SSD – A Comprehensive Guide.

The DRAM is usually housed in separate packages next to the controller. These can be standard DDR3 or DDR4 chips or a low-power DRAM variant that minimizes power consumption, much like the chips on the memory modules in your PC. For SSD use, however, low latency matters more than high bandwidth: the controller mostly performs small, quick look-ups, and DRAM serves these far faster than flash memory can. Controllers also have a small amount of faster but costlier SRAM that can be used for caching.

DRAM serves multiple purposes in an SSD. While it can be used as a write cache, its primary role is to act as a metadata cache. Metadata, meaning information about the data, includes the mapping that translates logical addresses (the block addresses the operating system and file system use) into physical locations in NAND flash. This translation bridges the gap between the file system and the storage medium. For fast access, the mapping is stored in a Lookup Table (LUT), which allows any entry to be retrieved quickly.
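To make the translation concrete, here is a toy page-level mapping table in Python. It is a simplified model with no garbage collection or persistence, and the class and method names are hypothetical; real controllers typically store the table as a flat array indexed by logical page number rather than as a dictionary.

```python
from collections import deque


class ToyFTL:
    """Toy page-level flash translation layer built around an L2P lookup table."""

    def __init__(self, num_physical_pages):
        self.l2p = {}                                 # logical page -> physical page
        self.free = deque(range(num_physical_pages))  # erased pages ready to program
        self.invalid = set()                          # stale pages awaiting erase

    def write(self, lpn):
        # Flash pages cannot be overwritten in place, so every write goes to a
        # fresh page; the table is updated and the old copy is marked stale.
        if not self.free:
            raise RuntimeError("no free pages: garbage collection would run here")
        ppn = self.free.popleft()
        old = self.l2p.get(lpn)
        if old is not None:
            self.invalid.add(old)
        self.l2p[lpn] = ppn
        return ppn

    def read(self, lpn):
        # Every read starts with a table lookup to find the physical page.
        return self.l2p[lpn]


ftl = ToyFTL(num_physical_pages=8)
ftl.write(5)                     # logical page 5 -> physical page 0
ftl.write(5)                     # rewrite lands on physical page 1; page 0 is stale
print(ftl.read(5), ftl.invalid)  # 1 {0}
```

A rewrite never touches the old physical page; it only changes the table and leaves a stale page behind, which is why garbage collection, and with it write amplification, exists.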

Metadata also includes wear-leveling information, data age, and more. The most recently used metadata is kept in DRAM, typically under a Least Recently Used (LRU) caching policy that evicts the entries that have gone unused the longest. The SSD also keeps a complete copy of the metadata for committed data in a system-reserved area of the NAND flash. In short, DRAM plays a vital role in both storage performance and data management.
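The caching policy can be sketched with Python’s `OrderedDict`. This is a simplified model that assumes single-entry caching (real controllers often cache mapping data in larger segments), and the class name and example mappings are hypothetical. Each miss costs an extra NAND read before the data itself can be read. Whether the cache lives in onboard DRAM or in an HMB, the logic is the same; only the cache’s location and size change.

```python
from collections import OrderedDict


class MappingCache:
    """LRU cache of L2P entries backed by the full mapping table in NAND."""

    def __init__(self, capacity, nand_table):
        self.capacity = capacity
        self.nand_table = nand_table   # complete copy kept in flash
        self.cache = OrderedDict()     # ordered from least to most recently used
        self.hits = 0
        self.nand_reads = 0

    def lookup(self, lpn):
        if lpn in self.cache:
            self.cache.move_to_end(lpn)  # mark as most recently used
            self.hits += 1
            return self.cache[lpn]
        # Miss: an extra flash read is needed before the data itself can be read.
        self.nand_reads += 1
        ppn = self.nand_table[lpn]
        self.cache[lpn] = ppn
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return ppn


nand = {lpn: 1000 + lpn for lpn in range(100)}  # hypothetical mappings
cache = MappingCache(capacity=2, nand_table=nand)
for lpn in (1, 2, 1, 3, 2):
    cache.lookup(lpn)
print(cache.hits, cache.nand_reads)  # 1 4
```

With a capacity of only two entries, looking up page 3 evicts page 2, so the final lookup of page 2 misses again; a larger cache, such as one held in host memory, would have turned it into a hit.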

The Host Memory Buffer (HMB) is a feature of the NVMe specification that allows a DRAM-less drive to use a portion of system memory for caching. The drive reports how much memory it would like, and the host operating system decides how much to grant. The allocation is typically only tens of megabytes, but that is sufficient for normal usage. Modern SSDs usually use 64MB by default, and Windows allows a maximum of 100MB. A larger allocation is possible, however, if the drive requests it and the host permits it.
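The negotiation can be sketched as follows. NVMe drives report their preferred and minimum buffer sizes in the Identify Controller fields HMPRE and HMMIN, both in 4 KiB units, and the host picks a size within its own limit. The selection rule below is a simplified assumption rather than the exact policy of Windows or Linux, the 100MB cap simply reuses the figure above, and the coverage estimate assumes one 4-byte mapping entry per 4 KiB page, a common but not universal layout. The function names are hypothetical.

```python
HMB_UNIT = 4096  # HMPRE and HMMIN are reported in 4 KiB units


def choose_hmb_size(hmpre, hmmin, host_cap_bytes):
    """Bytes a host might grant for the HMB, or 0 if it cannot satisfy the drive.

    Simplified policy (an assumption, not any specific OS's code): grant the
    drive's preferred size, capped by the host limit, if that still meets the
    drive's minimum.
    """
    if hmpre == 0:
        return 0  # drive does not request a host memory buffer
    granted = min(hmpre * HMB_UNIT, host_cap_bytes)
    return granted if granted >= hmmin * HMB_UNIT else 0


def lba_coverage_bytes(buffer_bytes, entry_bytes=4, page_bytes=4096):
    """Logical capacity whose mappings fit in the buffer (one entry per page)."""
    return buffer_bytes // entry_bytes * page_bytes


MiB, GiB = 2**20, 2**30
size = choose_hmb_size(hmpre=64 * MiB // HMB_UNIT, hmmin=8 * MiB // HMB_UNIT,
                       host_cap_bytes=100 * MiB)
print(size // MiB, lba_coverage_bytes(size) // GiB)  # 64 64
```

Under those assumptions, 64MB of mapping entries covers 64 GiB of logical space. Because the cache only needs to hold mappings for the data actively being accessed, that helps explain why tens of megabytes can be enough for normal usage.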

Note that allocated memory is not necessarily in active use, and the operating system may reclaim it if needed. To check whether HMB is enabled, you can use a testing utility or nvme-cli’s get-feature command with Feature Identifier (FID) 0Dh.
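Once you have the raw 32-bit value for feature 0Dh (for example from `nvme get-feature /dev/nvme0 -f 0x0d`, where the device path is a placeholder and the exact output format depends on the nvme-cli version), decoding it comes down to checking two bits. In the NVMe specification, bit 0 (EHM, Enable Host Memory) indicates whether the host memory buffer is enabled, and bit 1 (MR, Memory Return) indicates that the host is handing back a buffer it previously gave the controller. A minimal decoder, with a hypothetical function name:

```python
def decode_hmb_feature(dword0):
    """Decode the current value of the Host Memory Buffer feature (FID 0Dh)."""
    return {
        "enabled": bool(dword0 & 0x1),        # bit 0, EHM: Enable Host Memory
        "memory_return": bool(dword0 & 0x2),  # bit 1, MR: Memory Return
    }


print(decode_hmb_feature(0x1))  # {'enabled': True, 'memory_return': False}
```

A value with bit 0 cleared means the drive is currently running without a host memory buffer, whatever the operating system may have allocated.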

While HMB may not match the effectiveness of dedicated DRAM, modern DRAM-less drives can still deliver high performance. They do have some drawbacks, however, such as increased write amplification, reduced endurance, and weaker sustained performance, and background maintenance can carry higher overhead. These concerns are particularly relevant for QLC drives and for SATA drives, which cannot use HMB at all because it is an NVMe feature. Still, these drives keep improving, thanks to increasingly intelligent controllers.

NVMe drives, on the other hand, can benefit from HMB, and it pairs well with more affordable drives, which typically need less headroom for their mapping tables.
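To see why mapping headroom scales with the drive, the sketch below estimates the size of a full page-level mapping table and the share of it that a fixed 64MB buffer (the default mentioned earlier) can hold. It assumes one 4-byte entry per 4 KiB page, a common rule of thumb that works out to roughly 1 GB of mapping per 1 TB of flash, not a universal layout; the function names are hypothetical.

```python
def full_l2p_table_bytes(capacity_bytes, entry_bytes=4, page_bytes=4096):
    """Size of a flat page-level mapping table covering the whole drive."""
    return capacity_bytes // page_bytes * entry_bytes


def hmb_share_of_table(hmb_bytes, capacity_bytes):
    """Fraction of the full mapping table that fits in the host memory buffer."""
    return min(1.0, hmb_bytes / full_l2p_table_bytes(capacity_bytes))


HMB = 64 * 2**20  # 64 MiB buffer

for capacity_gb in (500, 1000, 2000):
    capacity = capacity_gb * 10**9
    table_mb = full_l2p_table_bytes(capacity) / 10**6
    print(f"{capacity_gb} GB drive: table ~{table_mb:.0f} MB, "
          f"HMB holds {hmb_share_of_table(HMB, capacity):.1%}")
```

Doubling the capacity doubles the table while the buffer stays the same size, so a smaller drive keeps a larger fraction of its mappings in host memory.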

Q and A

1) Is HMB as good as DRAM?

While HMB clearly improves the performance of DRAM-less drives, it may not be as effective as dedicated DRAM. Even without DRAM, modern drives can deliver impressive performance thanks to technological advances and smarter controllers. DRAM-less drives do have drawbacks, however: they may suffer higher write amplification, which reduces endurance, weaker sustained performance, and higher overhead for background maintenance. Despite these limitations, steady improvements in these drives, particularly in controller intelligence, are promising for the future of SSD technology.

2) Is HMB good for SSD?

HMB is indeed beneficial for SSDs, especially when considering the cost-performance ratio. By caching the Logical to Physical (L2P) mapping tables in main system memory, HMB SSDs keep the cost advantage of DRAM-less designs while retaining a substantial proportion of the performance of SSDs with onboard DRAM. In practice, this means fast data access and improved performance at a lower cost, which makes HMB a valuable feature and a promising path toward affordable, high-performance storage.